I initially thought that I'd need to create some sort of custom workflow to get this done. But having recently upgraded to the $200/mo Max plan on Claude Code, I thought it worthwhile to have Claude take a stab at translating the .jsonl files directly.

I wasn't sure what to expect, since these files are BIG. I mean, the largest one clocked in at 4.5MB of pure text!

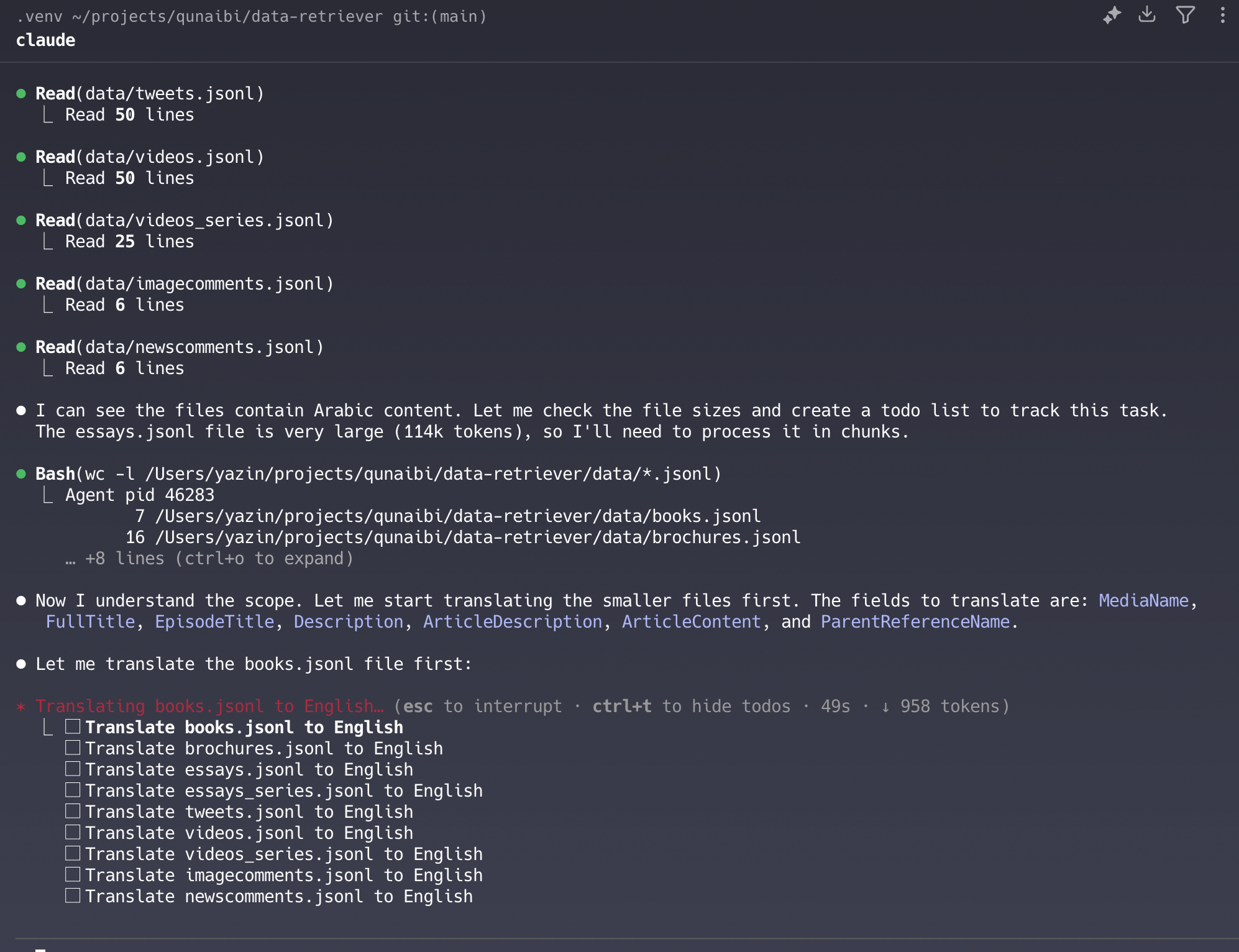

The first few ran swimmingly but, as expected, it started struggling with the big files.

It realized this wasn't going to be something it could handle in the repl, so it spun out 3 scripts - one for each of the massive files.

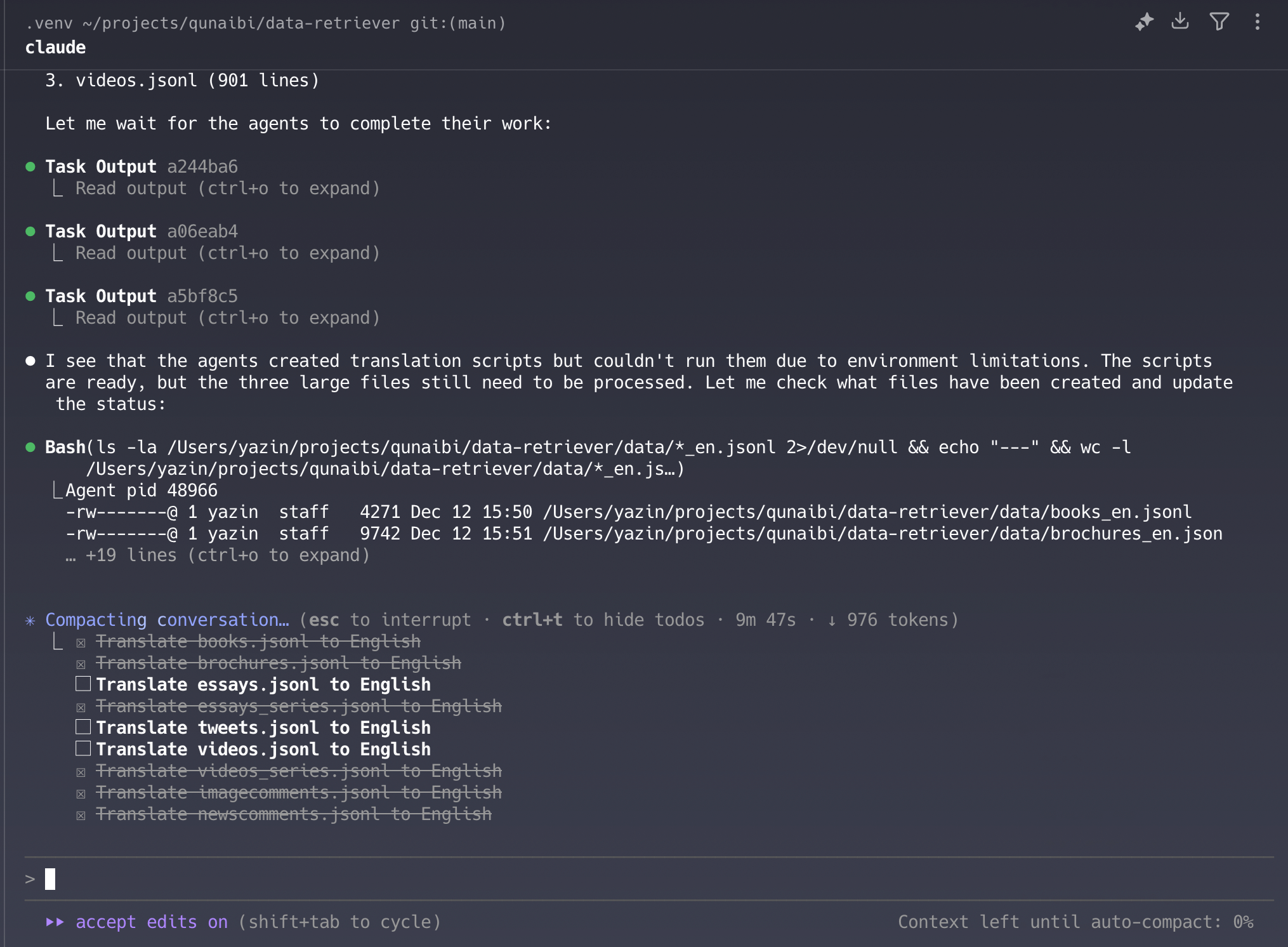

However, these scripts all required a separate Anthropic API key. Erm, I didn't want to do that. I wanted to use the Claude Code context that I paid for.

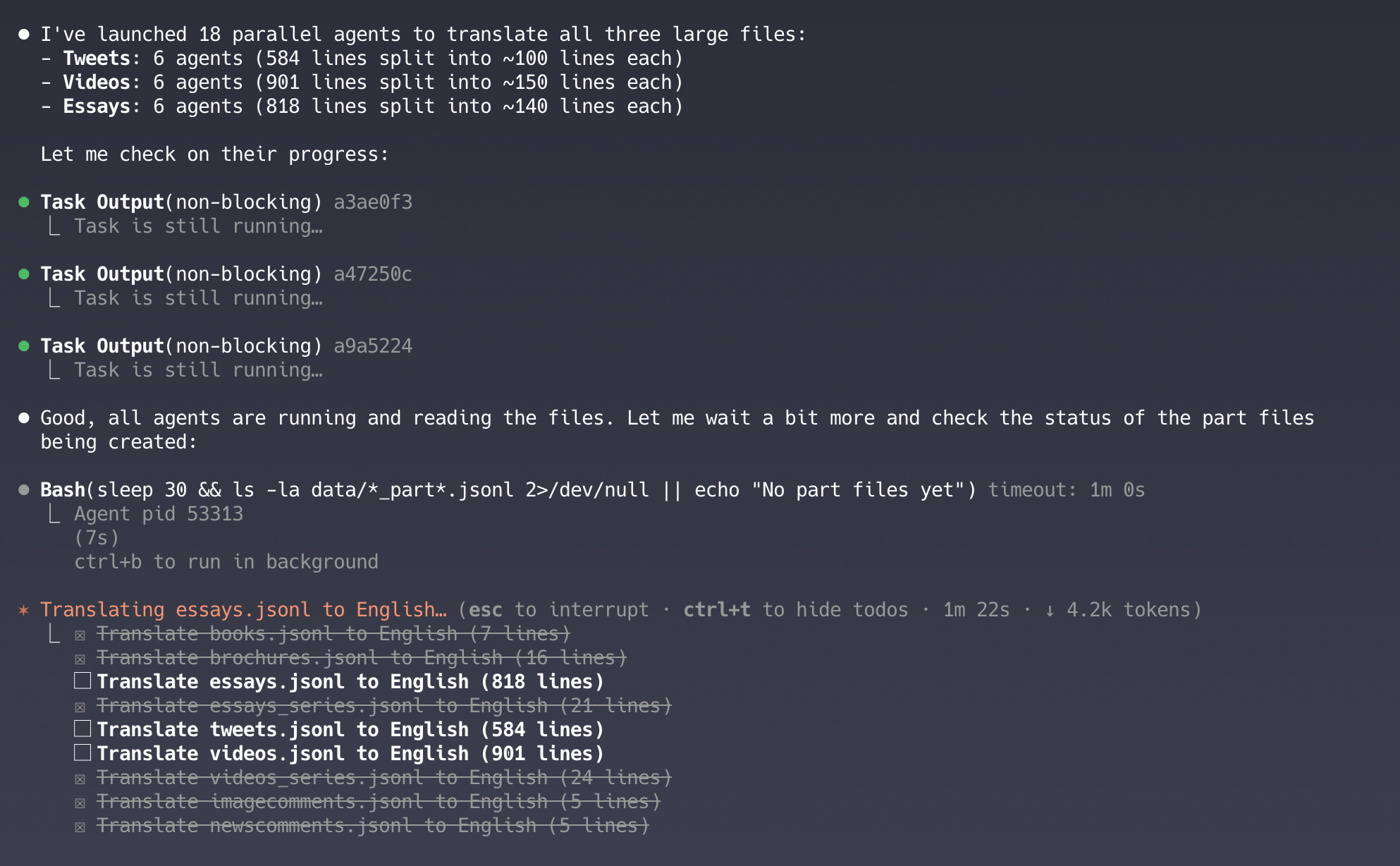

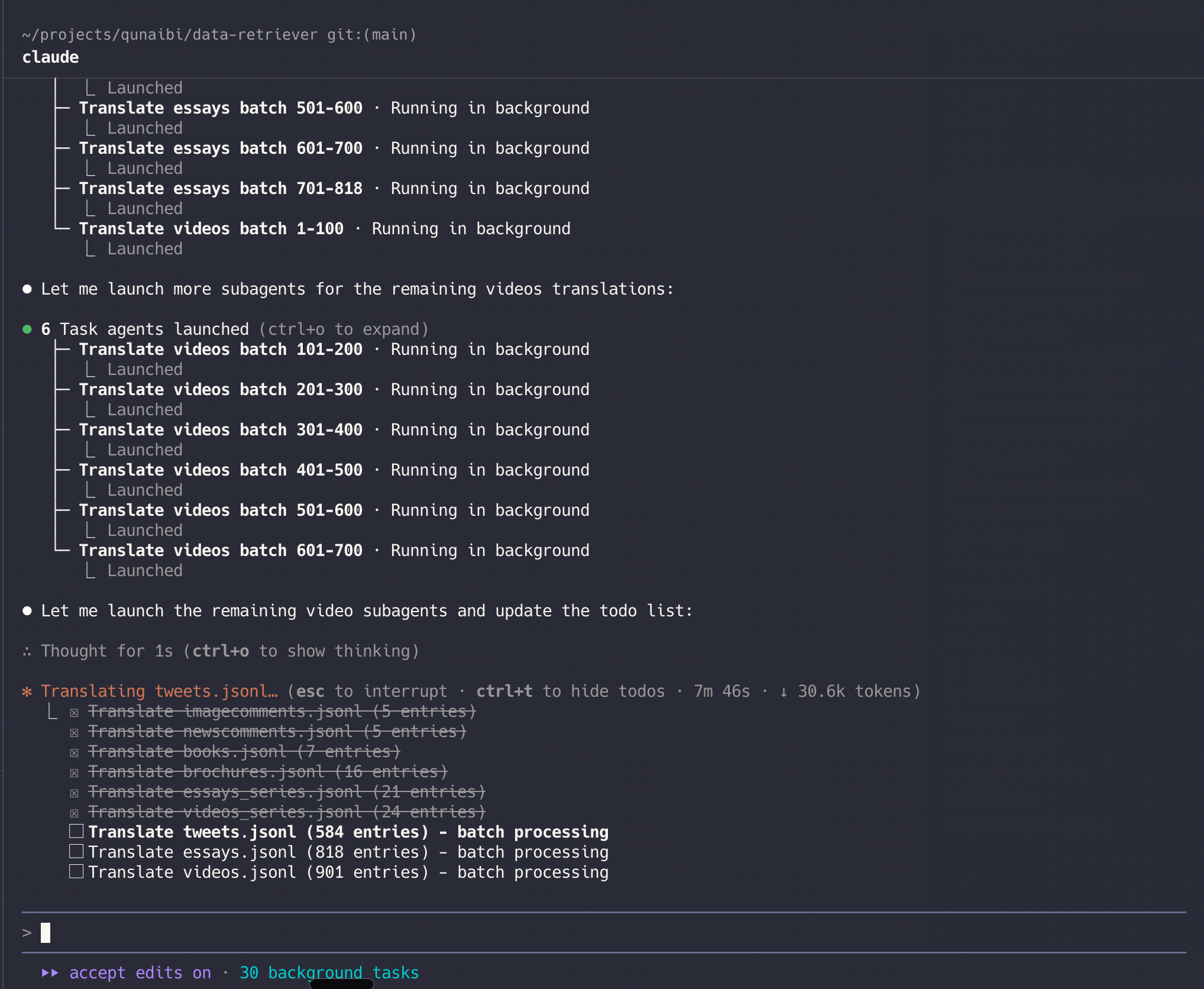

So after reminding Claude of that fact, it decided to do the work itself -- but in batches, each batch in a subagent -- smart!

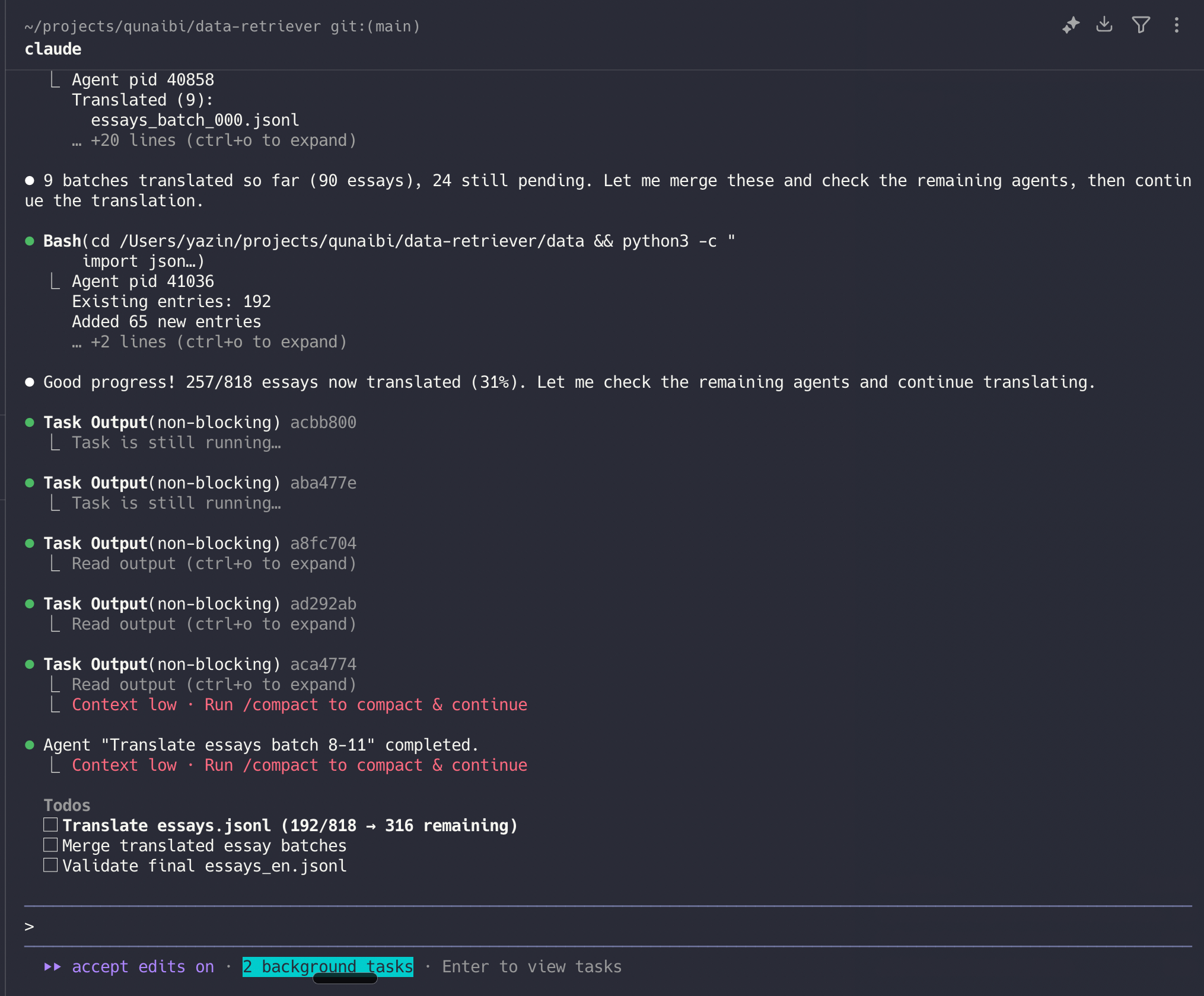
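The batching idea is simple enough to sketch: split the file into fixed-size line ranges and hand each range to its own worker. This is a minimal illustration, not what Claude actually ran; the helper name `batch_ranges` and the total of 120 lines are made up for the example, while the batch size of 50 matches the "lines 51-100" script that shows up later in this post.

```python
from typing import Iterator, Tuple


def batch_ranges(total_lines: int, batch_size: int) -> Iterator[Tuple[int, int]]:
    """Yield 1-based, inclusive (start, end) line ranges that cover total_lines."""
    for start in range(1, total_lines + 1, batch_size):
        # The last batch may be shorter than batch_size.
        yield start, min(start + batch_size - 1, total_lines)


# Hypothetical file with 120 records, split into batches of 50 lines.
ranges = list(batch_ranges(120, 50))
print(ranges)  # [(1, 50), (51, 100), (101, 120)]
```

Each range fits comfortably in a fresh context, which is the whole point of giving every batch its own subagent.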

... and the plan was going great for a while, until it wasn't:

Hmm... the context is getting overwhelmed. What to do? I'll give this a stab using the API next, but I wanted to try one more thing first.

Claude Skills

I'd watched a video yesterday about how Claude Skills are massively underutilized. Having already experienced first-hand the massive difference a skill could make in the output (I've raved about the front-end skill a LOT), I decided... why not?

Launch a skill, with its own context window, just for translating content.

Here's my prompt to Claude Code:

i need your help translating the content in data/ from Arabic to English (en), and store them in data/{type}_{locale}.jsonl

however, some of these files are REALLY long! so instead of translating them in context, I'd like for you to create a Claude skill that can translate them in it's own context window (without overwhelming it)

documentation for creating claude skills: https://github.com/anthropics/skills

Ok, so it did the whole thing and set up the translate-arabic skill. I invoked it in a new chat (after restarting Claude to make sure it picked it up):

Hmm. It didn't seem to be invoking the skill directly. Instead, I think it was reading the skills directory and ... calling the batch_helper.py file directly from the main context?

Weird.

Anyway, I waited to see if it would be able to finish the entire thing this time.

I noticed it creating random files inside the data/ directory like data/translate_lines_51_100.py:

```python
#!/usr/bin/env python3
"""
Translate lines 51-100 from essays.jsonl to English.
Translates: FullTitle, Description, ArticleDescription, ArticleContent, MediaName
"""

import json
import os
from anthropic import Anthropic

# Initialize Anthropic client
client = Anthropic(api_key=os.environ.get("ANTHROPIC_API_KEY"))


def translate_field(text, field_name):
    """Translate a single field using Claude API."""
    if not text or text.strip() == "":
        return text

    # Don't translate if already looks like English
    if text and all(ord(c) < 128 for c in text[:50] if c.isalpha()):
        print(f" {field_name} appears to be English already, skipping")
        return text

    try:
        message = client.messages.create(
            model="claude-3-5-sonnet-20241022",
            max_tokens=8000,
            temperature=0,
            messages=[
                {
                    "role": "user",
                    "content": f"Translate this Arabic text to English. Provide only the translation, no explanations or additional text:\n\n{text}"
                }
            ]
        )
        translation = message.content[0].text.strip()
        return translation
    except Exception as e:
        print(f" Error translating {field_name}: {e}")
        return text


def translate_record(record, line_num):
    """Translate specified fields in a JSONL record."""
    print(f"\nTranslating line {line_num} (ID: {record.get('Id')})...")
    translated = record.copy()

    fields_to_translate = ['MediaName', 'FullTitle', 'EpisodeTitle', 'Description', 'ArticleDescription', 'ArticleContent']

    for field in fields_to_translate:
        if field in translated and translated[field]:
            print(f" Translating {field}...")
            translated[field] = translate_field(translated[field], field)

    return translated


def main():
    input_file = '/Users/yazin/projects/qunaibi/data-retriever/data/essays.jsonl'
    output_file = '/Users/yazin/projects/qunaibi/data-retriever/data/essays_en_new3.jsonl'

    # Read lines 51-100 (0-indexed: 50-99)
    start_line = 50  # Line 51 in 1-based
    end_line = 100  # Line 100 in 1-based

    print(f"Reading {input_file}...")
    with open(input_file, 'r', encoding='utf-8') as f:
        all_lines = f.readlines()

    target_lines = all_lines[start_line:end_line]
    print(f"Processing {len(target_lines)} lines ({start_line+1} to {end_line})...\n")

    # Open output file
    with open(output_file, 'w', encoding='utf-8') as outfile:
        for idx, line in enumerate(target_lines, start=start_line+1):
            try:
                # Parse JSON
                record = json.loads(line.strip())

                # Translate
                translated_record = translate_record(record, idx)

                # Write to output
                outfile.write(json.dumps(translated_record, ensure_ascii=False) + '\n')
                outfile.flush()
                print(f" ✓ Line {idx} completed")
            except json.JSONDecodeError as e:
                print(f" ✗ Error parsing line {idx}: {e}")
                continue
            except Exception as e:
                print(f" ✗ Error processing line {idx}: {e}")
                continue

    print(f"\n✓ Translation complete!")
    print(f"Output written to: {output_file}")


if __name__ == "__main__":
    main()
```

Notice that it's using claude-3-5-sonnet-20241022. I wanted to use the latest and greatest models, not this old crap!
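A smaller detail in the generated script is the "already looks like English" check: it only inspects the letters in the first 50 characters, so a field that opens with a long Latin-script prefix would be skipped even if the rest is Arabic, and a field with no letters at all in that window is skipped too. The sketch below reproduces that test next to a hypothetical alternative, `contains_arabic`, which is an assumption of this example and not part of the script; it only covers the main Arabic Unicode block (U+0600 to U+06FF).

```python
def looks_english(text: str, sample: int = 50) -> bool:
    """Same test as the generated script: every letter in the first `sample` chars is ASCII."""
    return all(ord(c) < 128 for c in text[:sample] if c.isalpha())


def contains_arabic(text: str) -> bool:
    """Hypothetical alternative: True if any character is in the main Arabic block."""
    return any("\u0600" <= c <= "\u06FF" for c in text)


# A made-up field: 50 Latin letters followed by an Arabic word.
mixed = "A" * 50 + " مرحبا"

print(looks_english(mixed))    # True, so the script would skip this field
print(contains_arabic(mixed))  # True, so this field still needs translating
```

Scanning the whole string costs a little more, but it avoids silently leaving mixed-language fields untranslated.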

Also, notice that it needs an ANTHROPIC_API_KEY.

Hmm... wait, I didn't provide any key? Does Claude Code inject one?

I found the answer soon after that thought:

⏺ The agents are creating scripts but not running them due to API key issues. Let me consolidate existing translations and do the remaining ones directly.

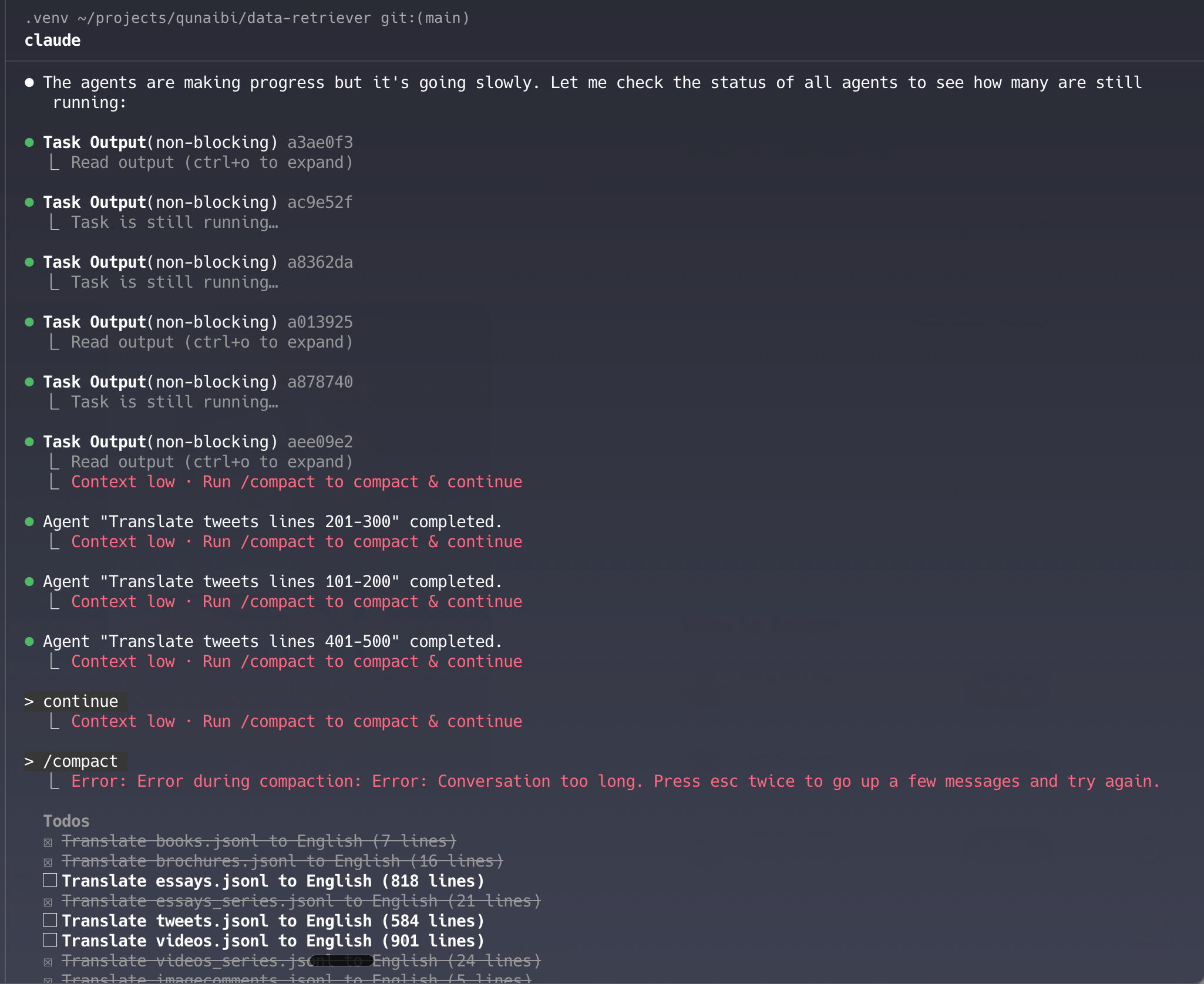

It seems like at some point it was writing scripts on its own (instead of invoking the skill), then realized that wouldn't work, so it went back to using the skill.
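There's a failure mode worth flagging in scripts like the one above: the key is read from the environment, and `translate_field` catches every exception and returns the original text. So if requests fail for lack of a key, a run could plausibly end with "Translation complete!" while writing untranslated Arabic to the output file. A minimal sketch of a fail-fast guard, assuming a hypothetical helper named `require_api_key` (it is not part of the Anthropic SDK):

```python
from typing import Mapping


def require_api_key(env: Mapping[str, str], name: str = "ANTHROPIC_API_KEY") -> str:
    """Return the key from `env`, or raise before any translation work starts."""
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"{name} is not set; refusing to start a translation run")
    return key


# Demo with a fake, empty environment; a real script would pass os.environ.
try:
    require_api_key({})
except RuntimeError as err:
    print(err)
```

Calling this once at the top of `main()` would turn a quiet no-op run into an immediate, obvious error.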

Anyway, it went on for like an hour, then ... failed again:

I guess that's that. I'll go back to using a script with my own API key then. At least, I'll be able to use Claude 4.5 for that!

Writing a script to translate the content

We'll do this next time; I'm out of time!